Mixtral 8x7B: Mistral AI's Triumph Over GPT-3.5, Changing the AI Landscape

In an era where AI tools are reshaping our world, Mistral AI’s Mixtral 8x7B emerges as a groundbreaking development, setting new standards in the field of artificial intelligence. This innovative AI model, with its unique “Mixture of Experts” architecture, not only challenges the capabilities of existing tools like OpenAI’s GPT-3.5 but also redefines what we can expect from AI in terms of efficiency, versatility, and accessibility. Mixtral 8x7B stands at the forefront of this technological revolution, offering advanced language and code generation abilities, exceptional multilingual support, and a user-friendly experience that democratizes AI technology.

Mistral AI’s Mixtral 8x7B surpasses existing AI models with its unique architecture, excelling in language and code generation, and offering extensive multilingual support. Its future developments promise to impact AI research and various industries significantly.

What is Mistral AI?

Background of the Company

Mistral AI, a beacon of innovation in the field of artificial intelligence, was founded in May 2023. This French startup emerged from the vision of a group of AI enthusiasts and experts, determined to make a significant impact in the rapidly evolving world of AI. The founders, with a blend of expertise in technology, business, and academia, aimed to create a company that not only pushes the boundaries of AI capabilities but also adheres to ethical standards in AI development.

Achievements and Milestones

Since its inception, Mistral AI has been on a meteoric rise. In June 2023, the company made headlines by securing a record-breaking seed funding of $118 million, a testament to the confidence investors have in its vision and technology. This funding round, one of the largest in European history for a startup, was remarkable not just for its size but also because it was achieved with just a 7-page pitch deck. By the end of the same year, Mistral AI’s valuation soared to approximately $2 billion, further solidifying its position as a leading player in the AI industry.

Mistral AI in the AI Community

Mistral AI has quickly established itself as a respected and influential entity in the AI community. Its approach to AI development, focusing on efficiency, cost-effectiveness, and open-source principles, has set it apart from its competitors. The company’s commitment to an open, responsible, and decentralized approach to technology resonates strongly within the AI community, especially among those advocating for more transparency and accessibility in AI. Mistral AI’s release of Mixtral 8x7B, an open-source model, further exemplifies this commitment, challenging the norms of AI development and distribution.

What is Mixtral 8x7B?

Design and Architecture

Mixtral 8x7B, a groundbreaking AI model developed by Mistral AI, represents a significant leap in the field of large language models. Its design is centered around a sparse “Mixture of Experts” (MoE) architecture. Rather than passing every token through one large feedforward block, each layer of the model contains eight feedforward blocks (the “experts”), and a small router network selects two of them for each token and combines their outputs. Unlike traditional dense models, which use all of their parameters for every token, Mixtral 8x7B activates only a fraction of its parameters at a time, which lets it grow in capacity without a matching increase in computation per token.
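To make the routing idea concrete, here is a minimal sketch of a sparse MoE layer in plain Python. It is an illustration under simplifying assumptions, not Mistral AI's implementation: the class name `SparseMoELayer`, the random weights, and the use of a single linear map as each toy "expert" are all hypothetical. It shows only the core mechanism, in which a router scores every expert, the top two are kept, and their outputs are blended using softmax weights.

```python
import math
import random


def softmax(values):
    """Numerically stable softmax over a short list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(matrix, vector):
    """Multiply a list-of-rows matrix by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


class SparseMoELayer:
    """Toy sparse mixture-of-experts layer (hypothetical, for illustration)."""

    def __init__(self, dim, num_experts=8, top_k=2, seed=0):
        rng = random.Random(seed)
        self.top_k = top_k
        # Router: one row of weights per expert, producing one score each.
        self.router = [[rng.gauss(0, 1) for _ in range(dim)]
                       for _ in range(num_experts)]
        # Assumption: each toy expert is a single dim x dim linear map.
        # Real experts are full feedforward blocks.
        self.experts = [[[rng.gauss(0, 1) for _ in range(dim)]
                         for _ in range(dim)]
                        for _ in range(num_experts)]

    def route(self, token):
        """Return the indices of the chosen experts and their mixing weights."""
        scores = matvec(self.router, token)
        ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
        chosen = ranked[:self.top_k]
        # Softmax is taken over the selected scores only.
        weights = softmax([scores[i] for i in chosen])
        return chosen, weights

    def forward(self, token):
        """Run only the chosen experts and sum their weighted outputs."""
        chosen, weights = self.route(token)
        output = [0.0] * len(token)
        for index, weight in zip(chosen, weights):
            expert_out = matvec(self.experts[index], token)
            output = [o + weight * e for o, e in zip(output, expert_out)]
        return output, chosen


layer = SparseMoELayer(dim=4)
output, chosen = layer.forward([1.0, 0.5, -0.5, 2.0])
print("experts used for this token:", chosen)
```

Only two of the eight experts do any work for a given token, which is where the savings in computation come from; the other six are skipped entirely for that token.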

Technical Specifications

The Mixtral 8x7B model is characterized by its relatively compact size yet powerful performance. The name suggests eight experts of 7 billion parameters each, but the total is not a simple 8 × 7 = 56 billion: only the feedforward blocks are replicated, while the attention layers are shared. According to Mistral AI, the model has about 46.7 billion parameters in total and uses only about 12.9 billion of them for any given token. Larger models like GPT-4 are widely believed to have significantly more parameters, although OpenAI has not published a figure. Despite its smaller size, Mixtral 8x7B does not compromise on capability. It maintains a 32K token context window, matching the larger of GPT-4’s original context options, allowing for extensive understanding and generation of longer text sequences. The model’s efficiency is further enhanced by its ability to run on non-GPU devices, making advanced AI technology more accessible.
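The arithmetic behind the parameter count can be sketched in a few lines of Python. The dimensions below are assumptions taken from the publicly released model configuration (hidden size 4096, 32 layers, feedforward size 14336, 32 attention heads with 8 key/value heads, a 32,000-token vocabulary); the `MoEConfig` and `count_params` names are hypothetical, and the count ignores minor details, so treat it as a rough estimate rather than an official breakdown.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MoEConfig:
    # Assumed values, taken from the published model configuration.
    dim: int = 4096
    n_layers: int = 32
    ffn_hidden: int = 14336
    n_heads: int = 32
    n_kv_heads: int = 8
    vocab_size: int = 32000
    n_experts: int = 8
    experts_per_token: int = 2


def count_params(cfg: MoEConfig, experts_counted: int) -> int:
    """Count parameters, including `experts_counted` experts per layer."""
    head_dim = cfg.dim // cfg.n_heads
    kv_dim = head_dim * cfg.n_kv_heads
    # Query and output projections are dim x dim; key and value are dim x kv_dim.
    attention = 2 * cfg.dim * cfg.dim + 2 * cfg.dim * kv_dim
    # Each expert is a gated feedforward block with three weight matrices.
    expert = 3 * cfg.dim * cfg.ffn_hidden
    router = cfg.dim * cfg.n_experts
    norms = 2 * cfg.dim
    per_layer = attention + experts_counted * expert + router + norms
    # Input embedding table plus a separate output projection, and a final norm.
    embeddings = 2 * cfg.vocab_size * cfg.dim
    return cfg.n_layers * per_layer + embeddings + cfg.dim


cfg = MoEConfig()
total = count_params(cfg, cfg.n_experts)
active = count_params(cfg, cfg.experts_per_token)
print(f"total parameters:  {total / 1e9:.1f}B")   # 46.7B
print(f"active per token:  {active / 1e9:.1f}B")  # 12.9B
```

The gap between the two numbers is the point of the design: all of the parameters must be stored, but only the shared layers and two experts per layer take part in processing each token.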

Comparative Analysis with Other Models

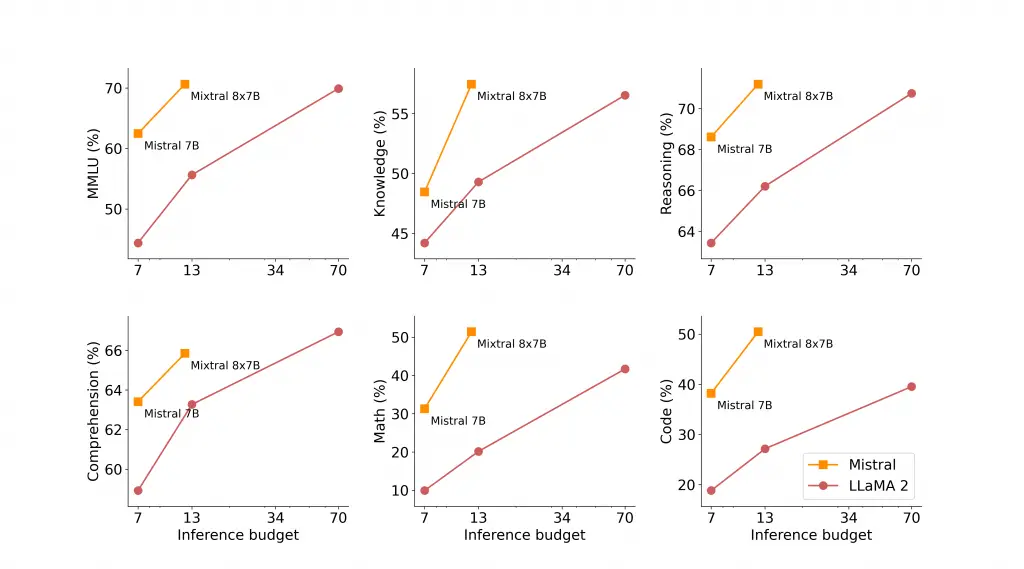

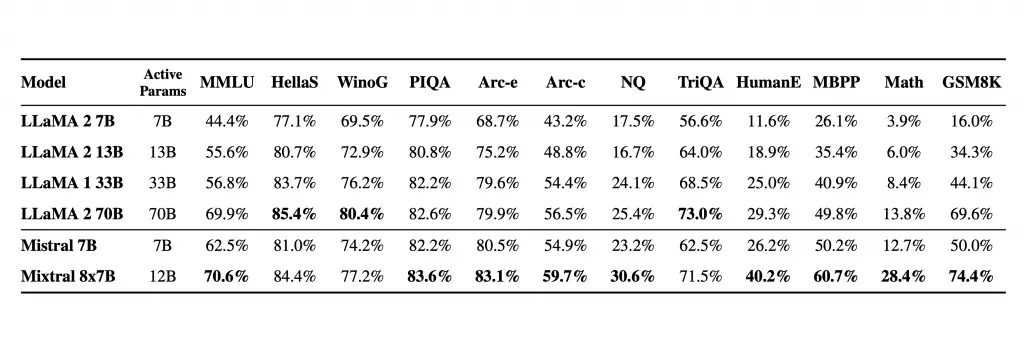

When compared to other models in the market, such as OpenAI’s GPT-3.5 and Meta’s Llama 2, Mixtral 8x7B stands out in several ways. Firstly, its MoE architecture provides a balance between size and performance, making it a more practical option for various applications. In terms of speed, Mixtral 8x7B has been shown to be significantly faster, with inference speeds up to six times quicker than Llama 2 70B. This efficiency is a result of its sparse architecture: each Transformer layer holds eight different feedforward blocks, but only two of them are used for any given token. Additionally, Mixtral 8x7B’s open-source nature contrasts with the closed-source approach of models like GPT-3.5, offering a more collaborative and transparent option in the AI community.

Functions of Mixtral 8x7B

Mixtral 8x7B, developed by Mistral AI, is a versatile and powerful AI model that offers a range of functionalities. Its unique architecture and design enable it to excel in various applications, making it a valuable tool in numerous fields. The key functions of Mixtral 8x7B are listed below, grouped by area.

Language Generation

- Contextual Understanding: Exceptional at understanding and generating contextually relevant text.

- Narrative Creation: Capable of crafting intricate narratives with a high degree of coherence.

- Long-Form Content: Excelling in generating long-form content without losing context or coherence.

Code Generation and Technical Applications

- Programming Assistance: Aids in writing, debugging, and suggesting improvements in code across various programming languages.

- Data Analysis: Capable of interpreting complex datasets and providing insightful analyses.

- Scientific Research Support: Assists in hypothesis generation and literature review in scientific research.

Multilingual Capabilities

- Language Support: Supports multiple languages, facilitating global communication and content creation.

- Cross-Cultural Understanding: Demonstrates an understanding of cultural nuances in different languages.

- Translation and Localization: Efficient in translating and localizing content, making it useful for international businesses and organizations.

Advanced Learning and Adaptability

- Continuous Learning: Can be fine-tuned on new data to improve over time, since its weights are openly available.

- Adaptability: Can adapt to various domains and industries, providing tailored outputs.

- User Feedback Incorporation: Capable of incorporating user feedback to refine its performance and outputs.

Accessibility and User-Friendly Interface

- Ease of Use: Designed with a user-friendly interface for both experts and novices.

- Broad Accessibility: Runs on non-GPU devices, making it accessible to a wider audience.

- Comprehensive Documentation: Well-documented, with resources available for users to understand and utilize the model effectively.

Mixtral 8x7B vs GPT-3.5

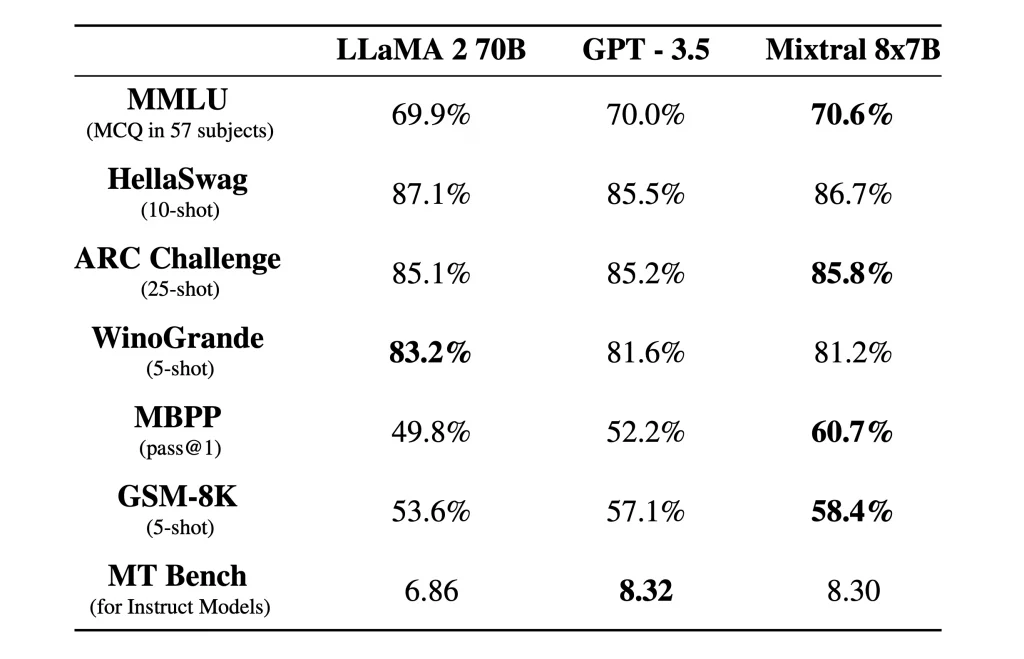

Performance Comparison

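- Speed: Mixtral 8x7B is reported to have an inference speed six times faster than Llama 2 70B. This is not a direct measurement against GPT-3.5, whose speed depends on OpenAI’s hosting infrastructure, so it suggests rather than establishes a speed advantage over GPT-3.5.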

- Language Generation: Both models excel in language generation, but Mixtral 8x7B shows particular strength in handling long contexts.

- Code Generation: Mixtral 8x7B is noted for its excellent code generation capabilities, a key area of comparison with GPT-3.5.

- Hardware Requirements: Mixtral 8x7B can run on non-GPU devices, including Apple Mac computers, making it more accessible than GPT-3.5, whose weights are not released and which can only be used through OpenAI’s hosted services.

Technological Innovations

- Architecture: Mixtral 8x7B employs a sparse “mixture of experts” approach, routing each token to a small subset of expert feedforward blocks. OpenAI has not published the architecture of GPT-3.5 in detail, so a direct structural comparison is not possible.

- Model Size and Efficiency: Despite using only a fraction of its parameters for each token, Mixtral 8x7B is reported to match or outperform GPT-3.5 on most standard benchmarks, highlighting its efficiency.

- Open-Source Nature: Unlike GPT-3.5’s closed-source approach, Mixtral 8x7B is open-source, aligning with Mistral’s commitment to an open, responsible, and decentralized approach to technology.

Market Impact and Industry Reception

- Adoption and Flexibility: AI enthusiasts and professionals have quickly adopted Mixtral 8x7B, impressed by its performance and flexibility.

- Safety Concerns: Experts have observed that Mixtral 8x7B lacks the safety guardrails found in GPT-3.5, which raises concerns that it will generate content other models would refuse to produce.

- Valuation and Funding: Mistral’s rapid growth, with a valuation soaring to approximately $2 billion, highlights the market’s recognition of the importance of models like Mixtral 8x7B.

User Experience and Accessibility of Mixtral 8x7B

Ease of Use and Integration

- Intuitive Interface: The model is equipped with an intuitive interface, simplifying interactions for both novice and experienced users. This ease of interaction makes it more approachable for a broader range of users, from AI researchers to hobbyists.

- Seamless Integration: Mixtral 8x7B is designed for seamless integration with existing systems and platforms. Its compatibility with common programming languages and frameworks reduces the learning curve and integration time for developers.

- Comprehensive Documentation: The availability of detailed documentation and tutorials aids users in understanding and utilizing the model effectively. This support is crucial for encouraging experimentation and innovation among users.

Accessibility and System Requirements

- Non-GPU Compatibility: Unlike many large AI models that require powerful GPU-based systems, Mixtral 8x7B can run on non-GPU devices, including laptops and desktops, provided they have enough memory to hold the weights (in practice this usually means a quantized version of the model). This feature significantly lowers the barrier to entry for individuals and organizations without access to high-end computing resources.

- Resource Efficiency: The model’s efficient design means it requires less computational power to operate effectively. This efficiency not only makes it more accessible but also more sustainable, as it consumes less energy.

- Open-Source Availability: Being open-source, Mixtral 8x7B is freely available for download and modification. This openness fosters a collaborative environment where users can share improvements and adaptations, further enhancing the model’s accessibility and applicability in various contexts.
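As a rough guide to what "enough memory" means for running the model locally, the back-of-the-envelope estimate below computes the space needed just to hold the weights at different precisions. It assumes the roughly 46.7 billion total parameters reported by Mistral AI; the function name `weight_memory_gb` is hypothetical, and the figures exclude activations, the attention cache, and runtime overhead, so real requirements will be somewhat higher.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# Assumption: total parameter count as reported by Mistral AI.
TOTAL_PARAMS = 46.7e9

for bits in (16, 8, 4):
    size = weight_memory_gb(TOTAL_PARAMS, bits)
    print(f"{bits:>2}-bit weights: roughly {size:.0f} GB")
```

At 16-bit precision the weights alone come to roughly 93 GB, well beyond a typical laptop, while 4-bit quantization brings them down to about 23 GB. Note that although only two experts are active per token, all eight must still be held in memory, so sparsity reduces computation but not storage.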

Ethical Considerations and Safety

Ethical AI Development

Mistral AI’s approach to the development of Mixtral 8x7B is deeply rooted in ethical considerations. Recognizing the profound impact AI can have on society, the company has implemented several strategies to ensure ethical compliance. Firstly, transparency is a cornerstone of their approach. By making Mixtral 8x7B open-source, Mistral AI invites scrutiny and collaboration, ensuring that a wide range of perspectives are considered in its development. Additionally, the company is committed to mitigating biases in AI. This involves using diverse datasets and continually refining algorithms to reduce any inherent prejudices. Mistral AI also emphasizes the importance of responsible AI usage, advocating for the ethical application of their technology in various industries. This holistic approach to ethical AI development reflects a commitment to creating technology that is not only advanced but also aligns with societal values and norms.

Safety Measures and Concerns

While Mixtral 8x7B represents a significant advancement in AI technology, it also brings forth certain safety concerns. One of the primary issues is the model’s open-source nature, which, while promoting transparency and collaboration, also raises questions about content moderation and misuse. Without built-in safety guardrails, there is a potential risk of the model generating harmful or unsafe content. To address this, Mistral AI encourages a community-driven approach to safety, where users and developers actively participate in identifying and mitigating risks. The company also emphasizes the importance of continuous monitoring and updating of the model to ensure it adheres to evolving ethical standards and societal expectations. However, the responsibility for safe and ethical usage largely falls on the users, underscoring the need for awareness and vigilance in the AI community.

Future Prospects and Developments

Roadmap and Future Plans

Mistral AI has outlined an ambitious roadmap for the future development of Mixtral 8x7B, reflecting their commitment to continuous innovation. In the near term, the company plans to enhance the model’s capabilities in language understanding and generation, aiming to set new benchmarks in natural language processing. There is also a focus on expanding the model’s multilingual capabilities, aiming to make it a truly global AI tool. Long-term plans include integrating more advanced machine learning techniques, such as reinforcement learning, to further enhance the model’s adaptability and performance. Mistral AI is also exploring partnerships with academic and research institutions to foster innovation and stay at the forefront of AI technology. These future developments are not just technical upgrades; they represent Mistral AI’s vision of shaping the future of AI in a way that is ethical, accessible, and transformative.

Potential Impact on AI Research and Industry

The potential impact of Mixtral 8x7B on AI research and the broader industry is immense. As the model continues to evolve, it is expected to drive significant advancements in various fields, from healthcare and finance to education and entertainment. Its ability to process and generate language with high accuracy and context awareness could revolutionize how we interact with technology, making AI interfaces more intuitive and human-like. In the realm of AI research, Mixtral 8x7B could become a valuable tool for exploring new frontiers in machine learning and AI ethics. Its open-source nature will likely stimulate a wave of collaborative research, leading to faster innovations and breakthroughs. Additionally, Mixtral 8x7B’s accessibility could democratize AI technology, enabling smaller businesses and developers to leverage advanced AI capabilities, thus fostering a more inclusive and diverse AI ecosystem.

Conclusion

In conclusion, Mistral AI’s Mixtral 8x7B represents a significant leap forward in the realm of artificial intelligence. With its innovative “Mixture of Experts” architecture, impressive language and code generation capabilities, and groundbreaking multilingual support, Mixtral 8x7B stands out as a versatile and powerful AI model. Its comparison with GPT-3.5 highlights its superior speed, efficiency, and accessibility, marking a new era in AI technology. While it brings forth new possibilities, it also raises important ethical considerations and safety concerns, emphasizing the need for responsible development and usage. Looking ahead, the future of Mixtral 8x7B is bright, with potential impacts that extend far beyond the current AI landscape, promising to revolutionize various industries and AI research. Mistral AI’s commitment to continuous innovation and ethical AI development positions Mixtral 8x7B not just as a technological marvel, but as a catalyst for positive change in the AI community and society at large.