The Taylor Swift AI Outrage: Fans Rally Against Deepfake Dilemma

In an era where artificial intelligence (AI) tools are becoming increasingly sophisticated, the digital world faces new challenges and ethical dilemmas. One such challenge is the emergence of AI-generated explicit images, a trend that has recently come into the spotlight with the case of Taylor Swift. Known as “Taylor Swift AI,” these images, created by advanced AI technologies, have rapidly spread across social media, sparking widespread controversy and concern. This phenomenon not only highlights the capabilities of AI tools in generating convincingly realistic content but also raises critical questions about privacy, consent, and the ethical use of technology in the digital age.

The rapid spread of AI-generated explicit images, particularly of Taylor Swift, has raised significant concerns about the ethical use of AI tools in creating content.

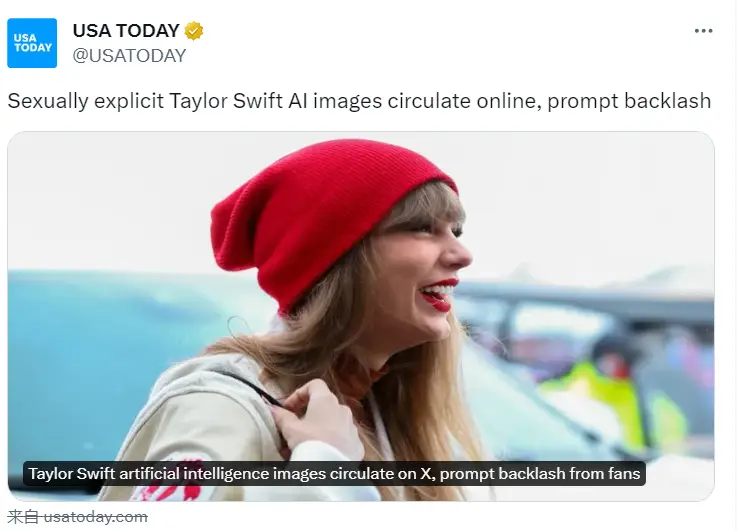

Rapid Spread of AI-Generated Explicit Images on Social Media

In late January 2024, the digital landscape was shaken by the rapid spread of AI-generated explicit images of the globally renowned pop star Taylor Swift. Created using advanced artificial intelligence technologies, the images surfaced across various social media platforms and drew widespread attention. They depicted Swift in sexually suggestive and explicit poses and quickly went viral, drawing tens of millions of views. The incident highlights not only the reach of social media but also the alarming speed at which such content can circulate globally.

Despite efforts by social media platforms to remove these images, content posted online is rarely erased completely. Many of the images continue to be shared on less regulated channels, raising significant concerns about digital consent and the ethical use of AI technology. The incident has sparked a broader conversation about the harm that AI-generated images can cause, especially when they target public figures without their consent. It also exposes the challenges social media companies face in moderating such content as they struggle to keep up with the rapid advancement and accessibility of AI tools.

What is “Taylor Swift AI”?

The term “Taylor Swift AI” refers to a series of artificial intelligence-generated images that depict the famous singer, Taylor Swift, in various fabricated scenarios. These images are created using advanced AI technologies that can generate realistic photos based on text prompts or existing images. In this case, the AI was used to produce explicit and suggestive images of Swift, none of which were real or consented to by the singer. This misuse of AI technology for creating non-consensual imagery of celebrities has raised serious concerns about privacy, consent, and the ethical implications of AI in the realm of digital media.

What is Deepfake?

Deepfake technology uses artificial intelligence, specifically deep learning algorithms, to create highly convincing fake videos and images. It typically works by superimposing one person's face or likeness onto existing images or videos, often with a machine learning technique known as a generative adversarial network (GAN). Deepfakes are particularly concerning because they can produce realistic-looking footage of individuals saying or doing things they never actually did, which opens the door to misinformation, defamation, and other forms of digital manipulation. The technology poses significant challenges for content verification, privacy rights, and efforts to limit the spread of false information.
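
For readers who want the underlying idea, the adversarial setup behind a GAN is usually written as a two-player game. The formulation below is the standard one from the GAN literature, not something specific to the tools used in this incident. The symbols $G$ (generator), $D$ (discriminator), $p_{\text{data}}$ (the distribution of real images), and $p_z$ (random noise) are notation introduced here for illustration.

$$
\min_G \max_D \; \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
$$

The discriminator $D$ is rewarded for telling real images from generated ones, while the generator $G$ is rewarded for fooling it. Training pushes $G$ toward output that $D$ can no longer distinguish from real data, which is why the results can look so convincing.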

Why is “Taylor Swift AI” so Popular?

The popularity of “Taylor Swift AI” images can be attributed to several factors, particularly in the context of today’s digital media landscape.

- Celebrity Influence: Taylor Swift is a global superstar with a massive fan base. Anything associated with her, even AI-generated content, naturally garners significant attention.

- Technological Curiosity: The advancement of AI technology, especially in creating realistic images, fascinates many people, leading to a heightened interest in AI-generated content.

- Viral Nature of Social Media: Social media platforms are designed to amplify content that generates strong reactions, and the controversial nature of these images makes them more likely to go viral.

- Sensationalism: The explicit nature of the images adds a sensationalist element, which often attracts more viewers and shares in the online world.

- Ethical and Legal Discussions: The creation and distribution of such images have sparked important conversations about ethics and legality in AI, drawing more public and media attention.

- Fan Reactions: The response from Swift’s fans, ranging from outrage to efforts to suppress these images, has also played a role in amplifying their visibility.

The Viral Outcry: 'Protect Taylor Swift' Campaign on Social Media

Emergence of the Campaign

The ‘Protect Taylor Swift’ campaign emerged as a powerful response from fans and the general public to the circulation of AI-generated explicit images. This grassroots movement quickly gained momentum on social media platforms, particularly on X (formerly Twitter), where fans rallied to defend Swift’s dignity and privacy. The campaign’s rapid spread highlighted the strength and solidarity of Swift’s fan base, as well as the broader community’s stance against non-consensual digital content.

Impact on Public Awareness

The campaign significantly raised public awareness of the ethical issues surrounding deepfake technology and AI-generated content. It sparked widespread discussions on consent, digital rights, and the potential harms of unchecked AI use. What began as a fan-driven initiative grew into a broader social commentary on the need for responsible AI usage and digital ethics.

Response from Social Media Platforms

Social media platforms, including X, faced scrutiny over their content moderation policies in light of the campaign. The incident exposed the challenges these platforms face in controlling the spread of AI-generated content and deepfakes. Despite policies against deceptive media, the platforms struggled to keep up with the rapid dissemination of these images, highlighting a growing concern in digital governance.

X Can’t Stop Spread of Explicit, Fake AI Taylor Swift Images

Limitations of Content Moderation

X’s inability to fully stop the spread of explicit, fake AI images of Taylor Swift underscores the limitations of current content moderation systems. Despite having policies against synthetic and manipulated media, the platform’s reliance on automated systems and user reporting proved insufficient in curbing the rapid spread of these images, raising questions about the effectiveness of existing moderation strategies.

Role of Automated Systems

X’s heavy reliance on automated systems for content moderation came under criticism during this incident. While these systems are essential for handling the vast amount of content on the platform, they often lack the nuanced understanding required to identify and manage deepfake content effectively. This has led to calls for more robust and sophisticated AI solutions, as well as greater human oversight in content moderation.
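
One way to picture the combination of automated systems, user reporting, and human oversight discussed above is a simple triage rule. The sketch below is illustrative only and does not describe how X actually moderates content. The `Post` fields, the `triage` function, and every threshold are hypothetical, and the classifier score is assumed to come from some separate automated detector.

```python
from dataclasses import dataclass

# Hypothetical thresholds, chosen only for illustration.
AUTO_REMOVE_SCORE = 0.95  # detector is very confident the media violates policy
REVIEW_SCORE = 0.60       # detector is unsure, so a person should look
REVIEW_REPORTS = 3        # enough user reports to warrant review regardless of score


@dataclass
class Post:
    post_id: str
    classifier_score: float  # 0.0 to 1.0, from an assumed automated detector
    user_reports: int        # number of user reports received so far


def triage(post: Post) -> str:
    """Return 'remove', 'human_review', or 'allow' for a single post."""
    if post.classifier_score >= AUTO_REMOVE_SCORE:
        return "remove"
    if post.classifier_score >= REVIEW_SCORE or post.user_reports >= REVIEW_REPORTS:
        return "human_review"
    return "allow"


if __name__ == "__main__":
    queue = [
        Post("a1", 0.98, 0),
        Post("b2", 0.70, 1),
        Post("c3", 0.10, 5),
        Post("d4", 0.05, 0),
    ]
    for post in queue:
        print(post.post_id, triage(post))
```

The third post shows why user reports matter in this sketch: the detector scores it low, but repeated reports still send it to a human reviewer instead of letting it through.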

Legal and Ethical Implications

The spread of fake AI images on X has highlighted significant legal and ethical implications. It raises concerns about the rights of individuals in the digital space, especially public figures, and the potential for harm through digital impersonation. This situation has prompted discussions among lawmakers, tech companies, and civil society about the need for stricter regulations and ethical guidelines for AI-generated content.

Content Moderation Challenges and the Rise of AI Tools

The Struggle of Social Media Platforms

Social media platforms are increasingly grappling with the challenge of moderating AI-generated content. As AI tools become more sophisticated and accessible, platforms like X (formerly Twitter) find themselves in a constant battle to identify and remove harmful content. This struggle highlights a significant gap in current content moderation capabilities, which are often unable to keep pace with the rapid evolution of AI technologies.

Balancing Act Between Freedom and Control

Content moderation on social media involves a delicate balance between protecting freedom of expression and preventing harm. Platforms are tasked with making nuanced decisions about what constitutes harmful content, often relying on AI tools that may lack the subtlety required for such judgments. This balancing act becomes even more complex with the introduction of AI-generated content, which blurs the lines between reality and fabrication.

Future of AI in Content Moderation

The rise of AI tools presents both a challenge and an opportunity for content moderation. While current AI systems struggle to effectively moderate deepfake content, there is potential for developing more advanced AI that can better detect and manage such content. This future direction points towards a more integrated approach, where AI not only creates content but also plays a crucial role in its ethical governance.

Public Outcry and Legislative Response to AI-Generated Celebrity Images

Mobilizing Public Sentiment

The spread of AI-generated images of celebrities like Taylor Swift has mobilized public sentiment, leading to widespread condemnation and calls for action. This public outcry reflects a growing awareness and concern about the potential misuse of AI technology, emphasizing the need for more responsible and ethical practices in the digital space.

Legislative Efforts and Challenges

In response to the public outcry, legislators have begun to introduce bills and regulations targeting the misuse of AI in creating non-consensual deepfake content. However, these legislative efforts face challenges, including defining the scope of regulation, ensuring the protection of free speech, and keeping up with the rapidly advancing technology.

The Role of Tech Companies and Self-Regulation

Tech companies are also under pressure to self-regulate and implement more effective measures against AI-generated deepfakes. This includes developing better content moderation tools, establishing clearer policies, and collaborating with legal and ethical experts. The role of these companies is crucial in shaping the future landscape of digital content and its ethical use.

Fighting Deepfake Cases

Case of Scarlett Johansson and Lisa AI

- Background: Scarlett Johansson faced a deepfake scenario where her likeness was used without consent in an advertisement for the AI image editor app, Lisa AI.

- Impact: This case raised serious concerns about the unauthorized use of celebrity images, leading to legal action by Johansson.

- Role of AntiFake: AntiFake, a preventive tool that scrambles signals before they can be misused, could play a crucial role by hindering the creation of convincing deepfakes. Its signal scrambling technique would make it difficult for AI systems to replicate Johansson’s likeness accurately, thus protecting her identity and potentially avoiding legal disputes.

Twitch Streamer’s AI Bot Case

- Situation: Twitch streamer Susu launched an AI bot to let fans generate custom content, aiming to combat deepfakes.

- Concerns: The initiative, while well-intentioned, raised concerns about exacerbating parasocial relationships and potential AI misuse.

- AntiFake’s Contribution: AntiFake could offer a solution by ensuring that any content generated is protected. Its ability to alter signals would prevent the creation of unauthorized deepfakes, safeguarding both the streamer and the audience.

General Deepfake Challenges

- Widespread Issues: Deepfake technology has been used for creating fake celebrity videos, impersonating public figures, and spreading misinformation.

- Deepfake Detection: Current methods focus on identifying AI-generated content post-creation.

- Preventive Measures with AntiFake: AntiFake’s approach is preventive, stopping deepfakes at the source. By scrambling data before misuse, it provides a proactive defense, making it a valuable tool against deepfake abuses.

Conclusion

The “Taylor Swift AI” incident serves as a pivotal moment in our understanding of the implications of AI technology in society. It underscores the urgent need for effective content moderation, the development of ethical AI tools, and robust legal frameworks to address the challenges posed by deepfake technology. As we navigate this new digital landscape, it is crucial to balance innovation with ethical considerations, ensuring that technology serves the greater good without infringing on individual rights and freedoms. The collective response from fans, the public, and legislators to this incident reflects a growing awareness and demand for responsible use of AI, setting a precedent for future developments in the field.