Nightshade AI: New Data Poisoning Tool For Artists Against AI

A new tool dubbed “Nightshade AI” gives artists a defense against the unauthorized use of their work by AI companies. It arrives amid growing concerns over intellectual property rights and the use of artists’ work for AI training without their consent.

What Is Nightshade AI?

Nightshade is a data poisoning tool designed to protect artists from having their work exploited by generative AI models without permission. It subtly alters the pixels in digital artwork so that an AI model trained on enough of these modified images learns distorted associations and produces flawed outputs, making the scraped images a liability rather than useful training data.

How Does Nightshade AI Work?

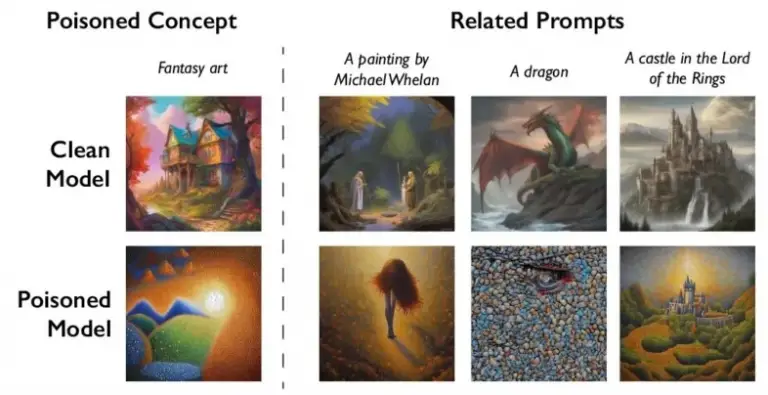

The tool introduces deliberate alterations to the pixels of digital images. These changes are imperceptible to the human eye but can significantly distort what an AI model learns from the images. For instance, they can cause a model to consistently misinterpret images of certain objects, leading to erroneous outputs. This “poisoning” acts as a deterrent, discouraging the scraping and unauthorized use of artists’ online portfolios for AI training datasets.
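To make "imperceptible alterations" more concrete, here is a minimal sketch of a bounded pixel change. It is not Nightshade's method: the article does not describe how the tool chooses its alterations, so random noise stands in for them here. The names `perturb`, `max_pixel_change`, and `epsilon` are hypothetical, chosen only for this illustration.

```python
import random

def perturb(image, epsilon=2, seed=0):
    """Return a copy of `image` with each pixel shifted by at most `epsilon`.

    `image` is a list of rows of 0-255 grayscale values. Random noise is only
    a stand-in; a real poisoning tool would choose its changes deliberately.
    """
    rng = random.Random(seed)
    out = []
    for row in image:
        new_row = []
        for px in row:
            shifted = px + rng.randint(-epsilon, epsilon)
            new_row.append(min(255, max(0, shifted)))  # stay in the valid pixel range
        out.append(new_row)
    return out

def max_pixel_change(a, b):
    """Largest absolute per-pixel difference between two same-sized images."""
    return max(abs(x - y) for ra, rb in zip(a, b) for x, y in zip(ra, rb))

original = [[10, 128, 250], [0, 64, 255]]
poisoned = perturb(original, epsilon=2)
print(max_pixel_change(original, poisoned))  # never more than epsilon
```

The point of the sketch is the bound: every pixel moves by at most `epsilon` out of 255 levels, which is the sense in which a change can be too small for a viewer to notice while the stored data is still different.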

Who Created Nightshade AI?

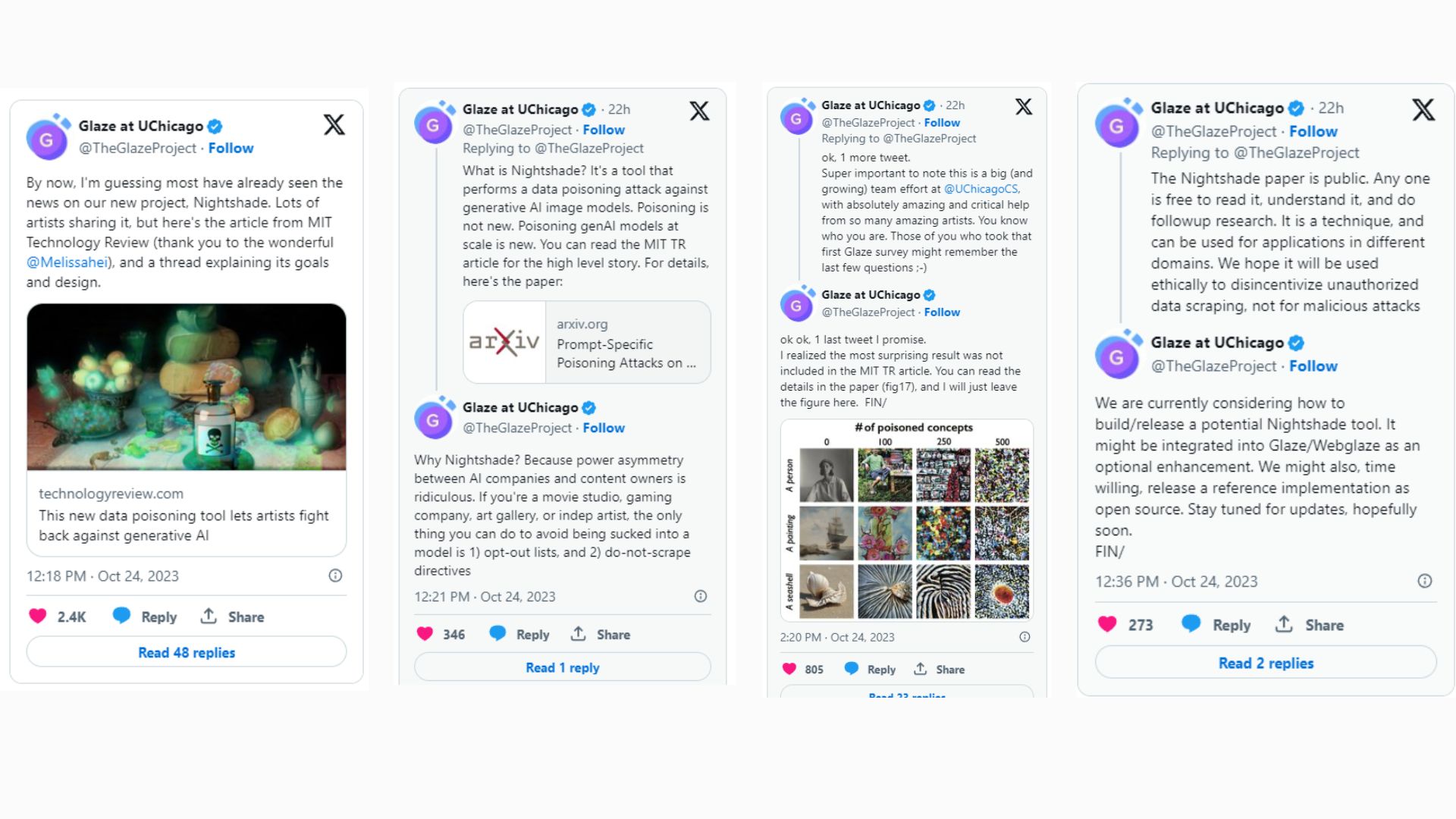

The brainchild of University of Chicago researchers led by computer science professor Ben Zhao, Nightshade AI is a response to the rampant misuse of artistic content by tech giants. The team, known for their previous work in digital rights for artists, including the Glaze tool, has taken a significant step forward in the fight for intellectual property rights in the age of AI.

What Do Nightshade AI’s Creators Intend To Do?

Zhao and his team aim to rebalance the power dynamic between artists and AI firms. By integrating Nightshade with Glaze, they offer artists a way to protect their work proactively. The tool, planned to be open-sourced, could become more potent as more artists use it, contributing to a collective defense mechanism. It’s not just about protecting individual pieces but about upholding respect for artists’ copyrights at large.

Nightshade AI Poison: A Targeted Attack Against Generative AI

In the escalating tension between digital artists and AI enterprises, Nightshade AI emerges as a sophisticated form of technological guerilla warfare, specifically engineered to disrupt generative AI models. These models, known for creating new content based on their training data, have become controversial for their reliance on massive online image repositories, often sourced without artist consent or compensation.

Nightshade AI operates on a deceptively simple premise: the introduction of ‘toxic’ data into the very heart of these generative AI systems. By making minute adjustments to the artwork, indiscernible to human observers but damaging to machine learning algorithms, Nightshade sabotages the training data that these AI models depend on. The result? A generative AI that can produce distorted, nonsensical, or functionally useless results.

This targeted attack is more than a mere inconvenience for AI firms; it strikes at the core of the generative process itself. By feeding corrupted data into the learning algorithms, Nightshade aims to make the output fundamentally flawed. Imagine a scenario where an AI model, trained to generate images of automobiles, starts producing images of aquatic life instead, all because the underlying data has been ‘poisoned’ by Nightshade’s subtle manipulations.
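The automobile-to-aquatic-life scenario can be illustrated with a toy model. This is only a sketch under heavy simplifying assumptions and does not reproduce Nightshade or any real generative model: each image is reduced to a single made-up feature value, and "training" is just averaging. The function names, the feature values, and the 60% poisoned share are all hypothetical, picked so the toy visibly flips; they say nothing about how many poisoned images a real model would need.

```python
def learned_prototype(samples):
    """Average the feature values of all training samples tagged with a concept."""
    return sum(samples) / len(samples)

def closest_concept(feature, references):
    """Name of the reference concept whose feature value is nearest."""
    return min(references, key=lambda name: abs(references[name] - feature))

# Hypothetical 1-D "features"; real models use high-dimensional representations.
references = {"car": 0.0, "fish": 10.0}

clean_cars = [0.0] * 40      # captioned "car" and carrying car-like features
poisoned_cars = [10.0] * 60  # captioned "car" but carrying fish-like features

clean_model = learned_prototype(clean_cars)
poisoned_model = learned_prototype(clean_cars + poisoned_cars)

print(closest_concept(clean_model, references))     # car
print(closest_concept(poisoned_model, references))  # fish
```

In the toy, what the model associates with the word "car" is whatever the "car"-captioned training data looks like on average, so once enough of that data carries fish-like features, asking for a car lands closer to a fish.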

But the implications of Nightshade’s strategy extend beyond immediate disruptions. If widely adopted, this tool could compromise the integrity of vast swaths of training data, forcing AI companies to question the reliability of their sources. This uncertainty, in turn, could necessitate more rigorous data vetting processes, slowing down AI research and development, and increasing operational costs.

Moreover, Nightshade’s planned open-source release adds a layer of unpredictability to this equation. As artists and developers customize and propagate their own versions of the tool, AI firms could find themselves contending with a hydra-like adversary, ever-changing and adapting in response to countermeasures. This scenario could herald an era of ongoing cyber-conflict, where data integrity becomes a battleground, and artists and tech giants vie for control over the future of digital content.

In this landscape, Nightshade AI is not just a protective measure; it’s a clarion call for change, challenging the status quo of data usage in AI and advocating for a future where artist rights and digital ethics shape technological progress.

Messages On X About Nightshade AI Poison

The Glaze project, run by Zhao’s team at the University of Chicago, posted on the platform X (formerly Twitter) to explain more about the impetus for Nightshade and how it works.

Thoughts On Personal Data Security In The Face Of AI Tools

The advent of Nightshade AI underscores pressing concerns surrounding data privacy and intellectual property in the digital age. It serves as a reminder of the potential vulnerabilities within AI technologies, highlighting the need for robust data security measures. Furthermore, it raises ethical questions about the responsibilities of AI developers and the rights of content creators, pointing towards a future where mutual respect and acknowledgment form the foundation of technological advancements.
