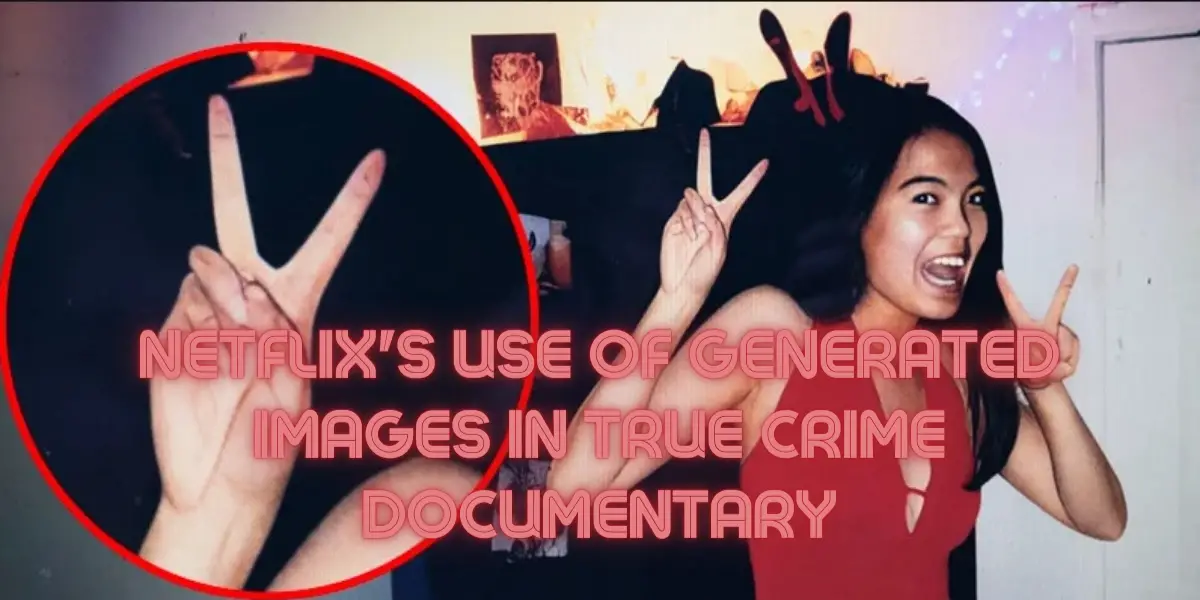

Netflix's Alleged Use of AI-Generated Images in a True Crime Documentary

Netflix has been at the forefront of entertainment innovation, often using cutting-edge technologies to enhance storytelling. However, the streaming giant recently found itself embroiled in controversy over its alleged use of AI-generated images in the true crime documentary “What Jennifer Did.” This has sparked a broader discussion on the ethical implications of AI in documentary filmmaking.

Netflix’s documentary “What Jennifer Did” faces controversy over the use of AI-generated images, raising questions about ethical standards and viewer trust in documentary filmmaking.

What is AI-Generated Content?

AI-generated content refers to material such as images, videos, or text produced by artificial intelligence systems rather than captured or made directly by people. In filmmaking, this can include everything from creating realistic backgrounds to simulating historical figures. Deep learning techniques such as generative adversarial networks (GANs) can produce highly realistic content that is difficult to distinguish from real footage. While this technology can make content creation faster and broaden what is possible, it also raises significant ethical concerns, particularly when viewers are unaware that what they’re seeing isn’t entirely real.

Overview of the Documentary 'What Jennifer Did'

“What Jennifer Did” is a Netflix documentary that delves into the harrowing case of Jennifer Pan, a young woman convicted of orchestrating a 2010 attack on her own parents in which her mother was killed and her father was seriously wounded. The documentary uses a blend of interviews, real courtroom footage, and reconstructed scenes to narrate the events leading up to and following the crime. It attempts to provide a comprehensive view of the psychological and familial pressures that may have driven Pan to such drastic actions.

The Controversy: Ethical Implications of AI in Documentaries

The integration of artificial intelligence in documentary filmmaking has sparked a significant debate concerning the ethical boundaries of its use. As AI technology advances, its application in creating or modifying content in documentaries raises critical questions about transparency, authenticity, and the potential misrepresentation of facts.

Transparency and Viewer Trust

A primary ethical concern with the use of AI in documentaries is the potential compromise of transparency. Viewers trust documentaries to provide factual, unbiased information, and the undisclosed use of AI to alter or generate content can violate this trust. This lack of transparency may lead audiences to question the veracity of the information presented, undermining the documentary’s credibility and the integrity of the filmmakers.

Authenticity of Content

AI-generated content challenges the authenticity of documentaries, which are traditionally valued for their direct portrayal of reality. When elements of a documentary are fabricated or significantly altered by AI, it blurs the line between real events and constructed narratives, potentially misleading viewers about the nature of the events depicted.

Ethical Use of AI

The ethical use of AI in documentaries also encompasses the responsibility of filmmakers to consider the implications of their technological choices. This includes the consideration of how AI-generated alterations might affect the subjects’ portrayal and the overall narrative. Filmmakers must navigate these ethical waters carefully to avoid exploiting or misrepresenting their subjects, particularly in sensitive or controversial topics.

Viewer Reactions to AI Alterations

Viewer response to AI alterations in documentaries has been mixed, reflecting a range of concerns from the impact on documentary authenticity to ethical considerations about the technology’s use.

Impact on Documentary Authenticity

Many viewers feel that the authenticity of a documentary is compromised when AI is used to alter or generate content without disclosure. Undisclosed AI use can weaken the documentary’s role as a reliable source of information, leaving some viewers feeling deceived when content they took to be real turns out to be partially or wholly generated by AI.

Ethical Concerns

There are significant ethical concerns among viewers, particularly regarding consent and the portrayal of real individuals. When real events and people are depicted using AI-generated imagery, it raises questions about the consent of those portrayed and the potential for harm or misrepresentation.

Demand for Disclosure

In response to these concerns, there is a growing demand among viewers for filmmakers to disclose the use of AI-generated content clearly. This transparency allows viewers to make informed judgments about the content and maintains trust between filmmakers and their audience.

How Was AI Detected in 'What Jennifer Did'?

The detection of AI-generated images in “What Jennifer Did” became a pivotal moment in understanding the implications of AI in documentary filmmaking.

- Viewer Observations: Viewers first noticed irregularities in certain images, such as distorted body parts and unnatural facial features, that are not typical of ordinary photographs.

- Expert Analysis: Technology experts were consulted to examine the suspected AI-generated images. Their analysis confirmed the presence of typical AI artifacts, which are not common in standard photographic processes.

- Media Coverage: As the controversy grew, media outlets began to investigate, comparing the documentary’s visuals to known AI-generated examples and consulting additional AI specialists to verify the claims.

- Official Statements and Denials: Netflix’s initial response to the allegations included denials and explanations, which were closely scrutinized by both the public and tech experts, further fueling the investigation.

Netflix's Response to AI Usage Allegations

Netflix addressed the allegations of AI usage in the documentary “What Jennifer Did” by clarifying its stance on the use of technological tools in content creation. The streaming giant emphasized its commitment to authenticity and transparency, stating that any AI-generated content was used unintentionally and was a result of the creative process, not an attempt to deceive viewers. Netflix reassured its audience that maintaining trust and delivering truthful storytelling remain paramount. It promised to review its content production processes to ensure clearer guidelines and controls around the use of AI, aiming to prevent similar issues in the future and to remain open about the methods and technologies used in its documentaries.

Could Netflix Face Litigation?

The potential for litigation against Netflix over the use of AI-generated images in “What Jennifer Did” hinges on several factors, including the legal framework regarding AI-generated content and the nature of the allegations. If viewers or stakeholders can prove that Netflix intentionally misled them or violated specific regulatory standards, the company could face legal challenges. The absence of clear laws specifically governing AI-generated content in documentaries complicates potential legal actions, but it doesn’t eliminate the possibility of lawsuits based on consumer protection or false advertising laws, or of complaints alleging a breach of ethical standards. As regulatory bodies catch up with technological advancements, Netflix and other content creators might also face new regulations that affect how content is produced and disclosed.

AI Use in Other Documentaries

As AI technology continues to advance, its integration into documentary filmmaking is becoming more prevalent, stirring both innovative prospects and ethical debates.

Enhancing Historical Accuracy

Some documentaries have leveraged AI to recreate historical environments or to restore archival footage, allowing viewers to experience past events more vividly. This use of AI can enhance the educational value of documentaries, providing a more immersive and engaging viewing experience. However, filmmakers must navigate the fine line between enhancing content and altering historical facts, ensuring they maintain the documentary’s integrity.

Creating Counterfactual Scenarios

AI is also used to simulate “what-if” scenarios, providing visual representations of events that didn’t happen but could have, based on historical data. This speculative use of AI helps to engage audiences in historical and scientific discussions, although it risks confusing viewers about what is fact and what is speculation.

Addressing Ethical and Privacy Concerns

Documentaries dealing with sensitive subjects are increasingly using AI to anonymize visual data, protecting the privacy of individuals while still telling compelling stories. This responsible use of AI respects subject privacy and complies with legal standards, yet it also necessitates transparency about the alterations for the sake of viewer trust and ethical integrity.

Conclusion

Netflix’s alleged use of AI-generated images in “What Jennifer Did” has opened up a complex debate on the ethical use of artificial intelligence in documentary filmmaking. While AI holds the potential to transform content creation, it must be used responsibly, with a firm commitment to transparency and authenticity. As we navigate this new landscape, the balance between innovation and integrity will define the future of documentary storytelling.