How To Use MemGPT With Open-Source Models: A Detailed Guide

MemGPT is a system that gives language models a longer effective memory than their context windows allow. It can also run on top of open-source models instead of a hosted API, which has made it a popular choice among enthusiasts and professionals. This guide gives beginners an overview of MemGPT and walks through using it with open-source models.


What Is MemGPT?

MemGPT, an open-source project from researchers at UC Berkeley, is a system that adds memory management to a language model. It operates within a memory hierarchy, managing both the working context and the external context, and uses a function calling paradigm to interact with its environment.
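
To make the function calling paradigm more concrete, here is a minimal Python sketch of how a model's output could be parsed and dispatched to a function. It is an illustration only, not MemGPT's actual implementation: the function names `send_message` and `remember`, the JSON shape, and the `working_context` list are all assumptions made for this example.

```python
import json
from typing import Callable, Dict, List

# Illustrative working context that the functions below can modify.
working_context: List[str] = []

def send_message(message: str) -> str:
    """Deliver a message to the user (hypothetical function)."""
    return f"assistant: {message}"

def remember(fact: str) -> str:
    """Append a fact to the working context (hypothetical function)."""
    working_context.append(fact)
    return "ok"

# Registry of functions the model is allowed to call.
FUNCTIONS: Dict[str, Callable[..., str]] = {
    "send_message": send_message,
    "remember": remember,
}

def dispatch(llm_output: str) -> str:
    """Parse a JSON function call emitted by the model and run it."""
    try:
        call = json.loads(llm_output)
    except json.JSONDecodeError:
        return "error: output is not valid JSON"
    if not isinstance(call, dict):
        return "error: output is not a JSON object"
    name = call.get("function")
    handler = FUNCTIONS.get(name)
    if handler is None:
        return f"error: unknown function {name!r}"
    try:
        return handler(**call.get("arguments", {}))
    except TypeError as exc:
        # Wrong or missing arguments are reported back instead of crashing.
        return f"error: bad arguments ({exc})"
```

In a loop of this kind, error strings would typically be fed back to the model so it can retry with a corrected call.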

Key Features Of MemGPT

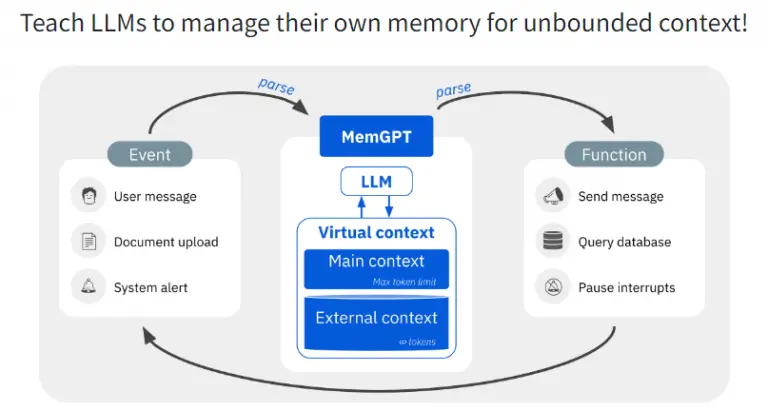

MemGPT, inspired by operating systems, is a Large Language Model (LLM) system designed to work around the limited context windows of large language models. Here are its key features:

- Virtual Context Management: MemGPT introduces a method known as virtual context management. This method, inspired by hierarchical memory architectures in conventional operating systems, allows the model to use context outside of the typically constrained context windows.

- Multi-level Memory Architecture: MemGPT comprises two primary memory types: the main context (akin to RAM in computers) and the external context (similar to disk storage). The main context is the typical fixed-context window seen in current language models, while any data stored outside this window is referred to as the external context.

- System Message or “Preprompt”: In MemGPT, the LLM inputs are considered the system’s main context. A significant portion of these tokens is used to store a system message or “preprompt” that defines the nature of the interaction. This preprompt can vary from simple primers to complex instructions.

- Function Calling Paradigm: MemGPT is optimized for function calling. It can understand and respond to function schemas, making it more dynamic in its interactions.

- Unbounded Context Handling: MemGPT can manage unbounded contexts using LLMs with finite context windows. It achieves this by combining a memory hierarchy, OS functions, and event-based control flow.
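
The memory hierarchy described above can be sketched in a few lines of Python. This is a toy model under stated assumptions, not MemGPT's code: the class name `VirtualContext`, the word-count "tokenizer", and the oldest-first eviction policy are all choices made for illustration.

```python
from collections import deque

class VirtualContext:
    """Toy two-tier memory: a bounded main context plus unbounded external storage."""

    def __init__(self, max_tokens: int):
        self.max_tokens = max_tokens
        self.main = deque()   # messages currently visible to the model (like RAM)
        self.external = []    # evicted messages, searchable later (like disk)

    @staticmethod
    def count_tokens(text: str) -> int:
        # Crude stand-in for a real tokenizer: one token per whitespace-separated word.
        return len(text.split())

    def used(self) -> int:
        """Tokens currently occupied in the main context."""
        return sum(self.count_tokens(m) for m in self.main)

    def add(self, message: str) -> None:
        """Add a message, evicting the oldest ones if the budget is exceeded."""
        self.main.append(message)
        while self.used() > self.max_tokens and len(self.main) > 1:
            self.external.append(self.main.popleft())

    def search(self, keyword: str) -> list:
        """Find evicted messages containing the keyword (case-insensitive)."""
        return [m for m in self.external if keyword.lower() in m.lower()]


ctx = VirtualContext(max_tokens=6)
ctx.add("my name is Ada")
ctx.add("I like green tea")   # pushes the first message out to external storage
ctx.add("hello")
print(list(ctx.main))         # messages still in the main context
print(ctx.search("ada"))      # evicted message retrieved from external storage
```

The point of the sketch is that nothing is lost when the window fills up: older content moves to external storage and can be brought back by a search.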

What Is The Advantage Of Using Open-Source Models With MemGPT?

- Overcoming Context Limitations: One of the primary advantages of MemGPT is its ability to handle contexts longer than a model’s context window, a limit that applies to open-source models just as it does to hosted ones. Paired with an open-source model, MemGPT can analyze large documents that would not fit in that model’s window.

- Enhanced Conversational Abilities: MemGPT, when used with open-source models, can maintain long-term memory, consistency, and adaptability across prolonged conversations. This is especially beneficial for chatbots and conversational agents.

- Flexibility and Customization: Open-source models offer flexibility. Users can experiment with different models, ensuring compatibility with MemGPT. Moreover, the open-source nature means continuous community-driven improvements and updates.

- Cost-Effective: Using open-source models can be cost-effective. Users can access advanced features without incurring high costs, making it a viable option for startups and individual developers.

- Community Support: Open-source models come with the backing of a robust community. Users can benefit from community discussions, guides, and troubleshooting tips, ensuring smooth integration with MemGPT.
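
As a rough illustration of how a document larger than the context window can still be processed, the sketch below splits text into pages that each fit a fixed budget and then reads them one at a time. The helper names `paginate` and `scan` and the word-based token count are assumptions for this example; MemGPT's own document handling may differ.

```python
from typing import List

def paginate(text: str, page_tokens: int) -> List[str]:
    """Split text into pages of at most page_tokens whitespace-separated words."""
    if page_tokens <= 0:
        raise ValueError("page_tokens must be positive")
    words = text.split()
    return [
        " ".join(words[i:i + page_tokens])
        for i in range(0, len(words), page_tokens)
    ]

def scan(pages: List[str], keyword: str) -> List[int]:
    """Read one page at a time and record which pages mention the keyword."""
    hits = []
    for number, page in enumerate(pages):
        if keyword.lower() in page.lower():
            hits.append(number)
    return hits


pages = paginate("the quick brown fox jumps over the lazy dog", page_tokens=4)
print(pages)
print(scan(pages, "lazy"))
```

Only one page needs to be in the model's window at any moment, which is what lets the total document size exceed the window.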

Taken together, MemGPT’s features and the advantages of open-source models make for a capable combination. Whether the goal is document analysis or a better conversational agent, MemGPT can improve both performance and user experience.

How To Set Up MemGPT?

Setting up MemGPT, especially with open-source models, can seem daunting, but with the right steps, it becomes a straightforward process. Here’s a detailed, step-by-step guide to help you set up MemGPT:

- Deploying on RunPod:

  - Access the RunPod platform.

  - Click on “Secure Cloud.”

  - Scroll down and select a GPU that aligns with your requirements.

  - Click “Deploy” to start the deployment. The default settings are usually sufficient.

  - Wait for the instance to load completely.

- Connect to HTTP Service:

  - Once deployed, click “Connect” to establish a connection.

  - Choose the “Connect to HTTP Service” option.

  - Use Port 7860 to open the Text Generation Web UI. The API that MemGPT talks to is served separately on port 5000.

- Download the Model:

  - For this guide, we’ll use the “Dolphin 2.0 mistral 7B” model. However, you can choose any compatible model.

  - Copy the model card information.

  - Navigate to the “Model” tab in the Text Generation Web UI.

  - Paste the model card information.

  - Click “Download” to start the model download process.

- Load the Model:

  - Refresh the model list to see the newly downloaded model.

  - Select the model by toggling from “none” to the new model.

  - Choose the Transformers model loader.

  - Click “Load” to load the model into memory.

- Set Backend Type:

  - Navigate to the “Session” tab.

  - Set the backend type to “web UI.” No additional flags or extensions are needed.

- Copy the API Endpoint URL:

  - Copy the URL of your RunPod instance. This will be the API endpoint for MemGPT.
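
If you prefer to build the endpoint URL rather than copy it, the sketch below shows one way to do so. It assumes that RunPod exposes each HTTP port of a pod at a proxy address of the form `https://<pod id>-<port>.proxy.runpod.net`, that the web interface listens on port 7860, and that the API listens on port 5000. The pod ID `abc123xyz` is hypothetical; check the URL shown in your own RunPod console before relying on this pattern.

```python
def runpod_proxy_url(pod_id: str, port: int) -> str:
    """Build the proxy URL for one exposed HTTP port of a pod (assumed URL pattern)."""
    if not pod_id.isalnum():
        raise ValueError("pod_id should be a non-empty alphanumeric string")
    if not 1 <= port <= 65535:
        raise ValueError("port must be between 1 and 65535")
    return f"https://{pod_id}-{port}.proxy.runpod.net"


POD_ID = "abc123xyz"                          # hypothetical pod ID
WEB_UI_URL = runpod_proxy_url(POD_ID, 7860)   # browser interface
API_URL = runpod_proxy_url(POD_ID, 5000)      # endpoint to hand to MemGPT
print(WEB_UI_URL)
print(API_URL)
```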

How To Use MemGPT With Open-Source Models?

Once you’ve set up MemGPT, integrating it with open-source models is the next step:

- Clone the MemGPT Repository:

  - Use the command: git clone <GitHub URL> to clone the MemGPT repository from GitHub.

  - Alternatively, you can use the provided MemGPT module, which eliminates the need to clone the repository.

- Navigate to the MemGPT Directory:

  - Use the command: cd MemGPT to change your current directory to the cloned MemGPT directory.

- Set the API Endpoint:

  - Use the command: export OPENAI_API_BASE=<RunPod URL>:5000 to set the API endpoint.

- Set Backend Type:

  - Use the command: export BACKEND_TYPE=webui to set the backend type.

- Create and Activate a Conda Environment:

  - Use the commands:

    - conda create -n auto-mem-gpt python=3.11 to create a Conda environment.

    - conda activate auto-mem-gpt to activate the environment.

- Install Necessary Packages:

  - Use the command: pip install -r requirements.txt to install the required packages.

- Start MemGPT:

  - Use the command: python3 main.py --no_verify to start MemGPT with message verification bypassed.
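
A mistyped environment variable is an easy mistake to make in the steps above, so it can help to check the values before starting MemGPT. The following Python sketch does that. It assumes the variable names `OPENAI_API_BASE` and `BACKEND_TYPE` and the backend value `webui`; the helper `check_memgpt_env` is hypothetical and not part of MemGPT.

```python
import os
from typing import List, Mapping, Optional
from urllib.parse import urlparse

def check_memgpt_env(env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Return a list of problems found in the MemGPT-related environment variables."""
    env = os.environ if env is None else env
    problems = []

    # The endpoint should be a full http(s) URL, for example http://127.0.0.1:5000.
    parsed = urlparse(env.get("OPENAI_API_BASE", ""))
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        problems.append("OPENAI_API_BASE must be an http(s) URL")

    # The backend name is a single word; "web UI" with a space would not match.
    if env.get("BACKEND_TYPE", "") != "webui":
        problems.append("BACKEND_TYPE must be 'webui'")

    return problems


if __name__ == "__main__":
    issues = check_memgpt_env()
    print("environment looks fine" if not issues else "\n".join(issues))
```

Running the check in the same shell where the variables were exported confirms that the MemGPT process will see them.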

How To Run MemGPT On My Local Machine?

Running MemGPT on your local machine follows a similar process:

- Initiate MemGPT:

  - Use the command: python3 main.py to start MemGPT.

  - Follow the prompts to select the model, persona, and user type.

- Interact with MemGPT:

  - Once MemGPT is running, you can interact with it by typing your prompts. MemGPT will provide responses based on the model and persona you’ve selected.

With these steps, you can set up MemGPT, connect it to an open-source model, and run it either against a hosted instance or on your own machine.

Is It Safe To Use MemGPT?

MemGPT is an open-source project, and as with any software, users should exercise caution. It’s essential to keep your installation up to date and be aware of potential vulnerabilities, especially when integrating third-party open-source models.

Conclusion

MemGPT, with its advanced features and compatibility with open-source models, offers a promising avenue for AI enthusiasts. By understanding its functionalities and ensuring safe practices, users can harness its full potential and achieve remarkable results.

FAQ

MemGPT is designed for memory management and uses a function calling paradigm for dynamic interactions.

While MemGPT is compatible with various models, users should ensure the chosen model supports the required function calling setup.

Platforms like GitHub offer comprehensive guides and community discussions that can assist users in the integration process.